New Features in 11.0.0

Catalog Performance Improvements

This version of Bacula introduces a new database format (schema) that eliminates the FileName table by placing the Filename into the File record of the File table. This substantially improves performance, particularly for large databases. The update_xxx_catalog script will automatically update the Bacula database format, but for very large databases (greater than 50GB) the migration may take some time, and it will double the size of the database on disk while it runs.

This database format change can provide very significant improvements in the speed of metadata insertion into the database, and in some cases (backup of large email servers) can significantly reduce the size of the database.

Automatic TLS Encryption

Starting with Bacula 11.0, all daemons and consoles use TLS automatically for all network communications. It is no longer necessary to set up TLS keys in advance. Automatic TLS PSK encryption can be turned off with the TLS PSK Enable directive.

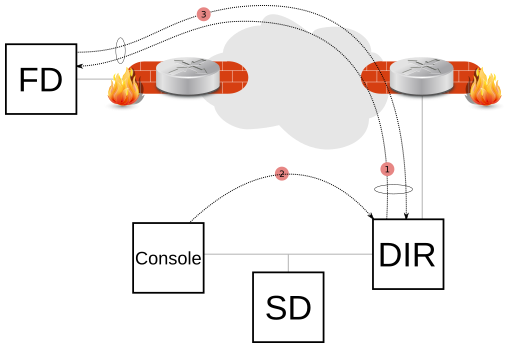

Client Behind NAT Support with the Connect To Director Directive

A Client can now initiate a connection to the Director (permanently or on a schedule). The Director can then communicate with the Client over that connection when a new Job is started or when a bconsole command such as status client or estimate is issued.

This new network configuration option is particularly useful for Clients that are not directly reachable by the Director.

# cat /opt/bacula/etc/bacula-fd.conf

Director {

Name = bac-dir

Password = aigh3wu7oothieb4geeph3noo # Password used to connect

# New directives

Address = bac-dir.mycompany.com # Director address to connect

Connect To Director = yes # FD will call the Director

}

# cat /opt/bacula/etc/bacula-dir.conf

Client {

Name = bac-fd

Password = aigh3wu7oothieb4geeph3noo

# New directive

Allow FD Connections = yes

}

It is possible to schedule the Client connection at certain periods of the day:

# cat /opt/bacula/etc/bacula-fd.conf

Director {

Name = bac-dir

Password = aigh3wu7oothieb4geeph3noo # Password used to connect

# New directives

Address = bac-dir.mycompany.com # Director address to connect

Connect To Director = yes # FD will call the Director

Schedule = WorkingHours

}

Schedule {

Name = WorkingHours

# Connect to the Director between 12:00 and 14:00

Connect = MaxConnectTime=2h on mon-fri at 12:00

}

Note that in the current version, if the File Daemon is started after 12:00, the next connection to the Director will occur at 12:00 the next day.

A Job can be scheduled in the Director around 12:00, and if the Client is connected, the Job will be executed as if the Client was reachable from the Director.
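
The timing note above can be illustrated with a few lines of Python. This is only a sketch of the described behavior, not Bacula's scheduler: the function name next_connect is hypothetical, and the rule is hard-coded from the WorkingHours example (mon-fri at 12:00). Skipping weekends is an assumption derived from that mon-fri rule.

```python
from datetime import datetime, time, timedelta

# Hypothetical model of the "mon-fri at 12:00" rule from the WorkingHours
# example. It does not reflect how Bacula implements schedules internally.
CONNECT_AT = time(12, 0)
WEEKDAYS = range(0, 5)  # Monday=0 .. Friday=4


def next_connect(fd_start):
    """Return the first scheduled connection time at or after fd_start."""
    candidate = datetime.combine(fd_start.date(), CONNECT_AT)
    # A File Daemon started after 12:00 has missed today's slot.
    if fd_start > candidate:
        candidate += timedelta(days=1)
    # Assumption: Saturday and Sunday are skipped because of "mon-fri".
    while candidate.weekday() not in WEEKDAYS:
        candidate += timedelta(days=1)
    return candidate


# File Daemon started on Tuesday 2020-04-21 at 12:30.
print(next_connect(datetime(2020, 4, 21, 12, 30)))  # 2020-04-22 12:00:00
```

A Director Job that should use this connection would therefore be scheduled inside the window that follows the computed time.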

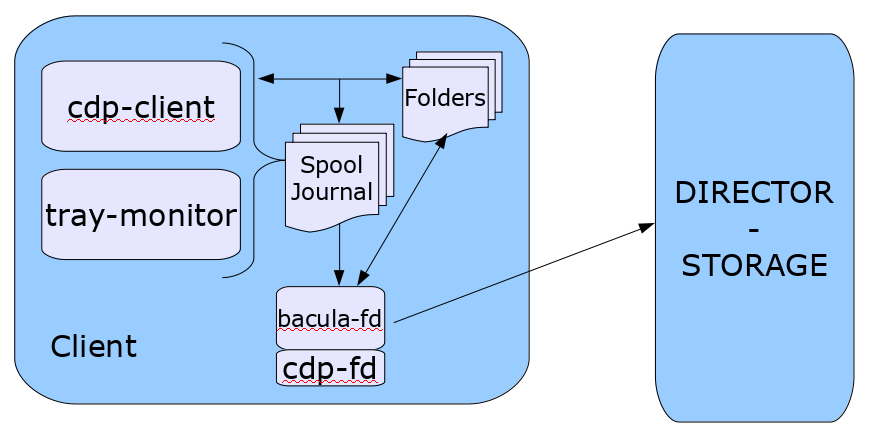

Continuous Data Protection Plugin

Continuous Data Protection (CDP), also called continuous backup or real-time backup, refers to backup of Client data by automatically saving a copy of every change made to that data, essentially capturing every version of the data that the user saves. It allows the user or administrator to restore data to any point in time.

The Bacula CDP feature is composed of two components: an application (cdp-client or tray-monitor) that monitors a set of directories configured by the user, and a Bacula FileDaemon plugin responsible for securing the data using the Bacula infrastructure.

The user application (cdp-client or tray-monitor) is responsible for monitoring files and directories. When a modification is detected, the new data is copied into a spool directory. At a regular interval, a Bacula backup job will contact the FileDaemon and will save all the files archived by the cdp-client. The locally copied data can be restored at any time without a network connection to the Director.

See the CDP (Continuous Data Protection) chapter for more information.

Global Autoprune Control Directive

The Director Autoprune directive can now globally control the Autoprune feature. This directive will take precedence over Pool or Client Autoprune directives.

Director {

Name = mydir-dir

...

AutoPrune = no # switch off Autoprune globally

}

Event and Auditing

The Director daemon can now record events such as:

- Console connection/disconnection

- Daemon startup/shutdown

- Command execution

- ...

The events may be stored in a new catalog table, to disk, or sent via syslog.

Messages {

Name = Standard

catalog = all, events

append = /opt/bacula/working/bacula.log = all, !skipped

append = /opt/bacula/working/audit.log = events, !events.bweb

}

Messages {

Name = Daemon

catalog = all, events

append = /opt/bacula/working/bacula.log = all, !skipped

append = /opt/bacula/working/audit.log = events, !events.bweb

append = /opt/bacula/working/bweb.log = events.bweb

}

The new message category “events” is not included in the default configuration files.

It is possible to filter out some events using the "!events." form, as in "!events.bweb" above. Up to 10 custom events can be specified per Messages resource.

All event types are recorded by default.

When stored in the catalog, the events can be listed with the “list events” command.

* list events [type=<str> | limit=<int> | order=<asc|desc> | days=<int> |

              start=<time-specification> | end=<time-specification>]

+---------------------+------------+-----------+--------------------------------+

| time                | type       | source    | event                          |

+---------------------+------------+-----------+--------------------------------+

| 2020-04-24 17:04:07 | daemon     | *Daemon*  | Director startup               |

| 2020-04-24 17:04:12 | connection | *Console* | Connection from 127.0.0.1:8101 |

| 2020-04-24 17:04:20 | command    | *Console* | purge jobid=1                  |

+---------------------+------------+-----------+--------------------------------+

The .events command can be used to record an external event. The source will be recorded as “**source**”. The event type can have a custom name.

* .events type=baculum source=joe text="User login"
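
For scripted auditing, the table printed by list events can be turned into structured records. The following Python sketch assumes the output has already been captured as text (how it is captured is left open) and that no cell contains a "|" character. The function name parse_events is hypothetical.

```python
# Sample text in the layout printed by "list events" (copied from above).
SAMPLE = """\
+---------------------+------------+-----------+--------------------------------+
| time                | type       | source    | event                          |
+---------------------+------------+-----------+--------------------------------+
| 2020-04-24 17:04:07 | daemon     | *Daemon*  | Director startup               |
| 2020-04-24 17:04:12 | connection | *Console* | Connection from 127.0.0.1:8101 |
| 2020-04-24 17:04:20 | command    | *Console* | purge jobid=1                  |
+---------------------+------------+-----------+--------------------------------+
"""


def parse_events(output):
    """Parse the ASCII table into a list of dicts keyed by column name."""
    rows = []
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines and the +----+ border lines.
        if not line.startswith("|"):
            continue
        # Assumption: cell contents never contain "|".
        rows.append([cell.strip() for cell in line.strip("|").split("|")])
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


for event in parse_events(SAMPLE):
    print(event["time"], event["type"], event["event"])
```

Each record can then be filtered by its type or source field, for example to report only command events.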

New Prune Command Option

The prune jobs all command will query the catalog to find all combinations of Client/Pool, and will run the pruning algorithm on each of them. At the end, all files and jobs not needed for restore that have passed the relevant retention times will be pruned.

The command prune jobs all yes can be scheduled in a RunScript, for example to prune the catalog once per day. All Clients and Pools will be analyzed automatically.

Job {

...

RunScript {

Console = "prune jobs all yes"

RunsWhen = Before

failjobonerror = no

runsonclient = no

}

}

Dynamic Client Address Directive

It is now possible to use a script to determine the address of a Client when a dynamic DNS option is not a viable solution:

Client {

Name = my-fd

...

Address = "|/opt/bacula/bin/compute-ip my-fd"

}

The command used to generate the address should print a single line containing a valid address and exit with code 0. An example would be:

Address = "|echo 127.0.0.1"

This option might be useful in some complex cluster environments.
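
As an illustration, a helper such as the compute-ip script named in the example could be written in Python. Only the contract (one line on standard output, exit code 0 on success) comes from the text above. The lookup table KNOWN_CLIENTS, its placeholder address, and the restriction to literal IP addresses are assumptions made for this sketch.

```python
import ipaddress
import sys

# Placeholder data: a real script might query a cluster manager or a lease
# file instead. 192.0.2.10 is a documentation-only address (RFC 5737).
KNOWN_CLIENTS = {"my-fd": "192.0.2.10"}


def compute_ip(client_name, table=KNOWN_CLIENTS):
    """Return the address for client_name; raise if unknown or invalid."""
    address = table[client_name]      # KeyError if the client is unknown
    ipaddress.ip_address(address)     # ValueError if not a valid IP address
    return address


def main(argv):
    """Print exactly one line with the address; return the exit code."""
    try:
        print(compute_ip(argv[1]))
    except (IndexError, KeyError, ValueError) as exc:
        print(f"compute-ip: {exc!r}", file=sys.stderr)
        return 1                      # non-zero: no usable address
    return 0


# A real script would end with: sys.exit(main(sys.argv))
```

Errors go to standard error so that standard output carries either one address line or nothing.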

Volume Retention Enhancements

The Pool/Volume parameter Volume Retention can now be disabled by setting it to 0, so that a volume is never pruned based on the Volume Retention time. When Volume Retention is disabled, only the Job Retention time will be used to prune jobs.

Pool {

Volume Retention = 0

...

}

Windows Enhancements

- Support for Windows files with non-UTF16 names.

- Snapshot management has been improved, and a backup job now relies exclusively on the snapshot tree structure.

- Support for backup of the system.cifs_acl extended attribute with Linux CIFS has been added. It can be used to back up Windows security attributes from a CIFS share mounted on a Linux system. Note that only recent Linux kernels can handle the system.cifs_acl feature correctly. The FileSet must use the XATTR Support=yes option, and the CIFS share must be mounted with the cifsacl option. See mount.cifs(8) for more information.

GPFS ACL Support

The new Bacula FileDaemon supports GPFS filesystem-specific ACLs. The GPFS libraries must be installed in the standard location. To check whether GPFS support is available on your system, the following commands can be used.

*setdebug level=1 client=stretch-amd64-fd

Connecting to Client stretch-amd64-fd at stretch-amd64:9102

2000 OK setdebug=1 trace=0 hangup=0 blowup=0 options= tags=

*st client=stretch-amd64-fd

Connecting to Client stretch-amd64-fd at stretch-amd64:9102

stretch-amd64-fd Version: 11.0.0 (01 Dec 2020) x86_64-pc-linux-gnu-bacula debian 9.11

Daemon started 21-Jul-20 14:42. Jobs: run=0 running=0.

Ulimits: nofile=1024 memlock=65536 status=ok

Heap: heap=135,168 smbytes=199,993 max_bytes=200,010 bufs=104 max_bufs=105

Sizes: boffset_t=8 size_t=8 debug=1 trace=0 mode=0,2010 bwlimit=0kB/s

Crypto: fips=no crypto=OpenSSL 1.0.2u 20 Dec 2019

APIs: GPFS

Plugin: bpipe-fd.so(2)

The APIs line indicates whether /usr/lpp/mmfs/libgpfs.so was loaded when the Bacula FD service started.
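
When many Clients need to be checked, the APIs line can be tested with a small script. The Python sketch below assumes the status client output has been captured as multi-line text. The function name gpfs_loaded is hypothetical, and splitting API names on whitespace or commas is a guess, since the example shows a single entry.

```python
# Shortened sample of a "status client" header, as shown above.
SAMPLE = """\
stretch-amd64-fd Version: 11.0.0 (01 Dec 2020) x86_64-pc-linux-gnu-bacula debian 9.11
Daemon started 21-Jul-20 14:42. Jobs: run=0 running=0.
 Crypto: fips=no crypto=OpenSSL 1.0.2u 20 Dec 2019
 APIs: GPFS
 Plugin: bpipe-fd.so(2)
"""


def gpfs_loaded(status_output):
    """Return True if the "APIs:" line of the status output lists GPFS."""
    for line in status_output.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key == "APIs":
            # Assumption: multiple APIs are separated by spaces or commas.
            return "GPFS" in value.replace(",", " ").split()
    return False


print(gpfs_loaded(SAMPLE))  # True
```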

The standard ACL Support directive can be used to automatically enable support for GPFS ACL backup.

New Baculum Features

Multi-user interface improvements

New functions and improvements have been added to the multi-user interface and restricted access.

The Security page has new tabs:

- Console ACLs

- OAuth2 clients

- API hosts

These new tabs help to configure OAuth2 accounts, create restricted Bacula Consoles for users, and create API hosts. They ease the process of creating users with restricted access to Bacula resources.

Add searching jobs by filename in the restore wizard

In the restore wizard, it is now possible to select the job to restore by the name of a file stored in the backups. It is also possible to limit the results to a specific path.

Show more detailed job file list

The job file list now displays file details such as file attributes, UID, GID, size, mtime, and whether the file record is for a saved or a deleted file.

Add graphs to job view page

On the job view page, new pie and bar graphs are available for the selected job.

Implement graphical status storage

Two new types of status (raw and graphical) are available on the storage status page. The graphical status page has a modern look and is refreshed asynchronously.

Add Russian translations

Global messages log window

A new window has been added to browse Bacula logs in a friendly way.

Job status weather

The job status weather has been added to the job list page to express the current job condition.

Restore wizard improvements

The restore wizard can now list and browse names encoded in non-UTF encodings.

New API endpoints

- /oauth2/clients

- /oauth2/clients/client_id

- /jobs/files

New parameters in API endpoints

- /jobs/jobid/files - 'details' parameter

- /storages/show - 'output' parameter

- /storages/storageid/show - 'output' parameter